As a pharmaceutical manufacturing CIO, you’re not just managing IT systems—you’re enabling traceability, compliance, and operational excellence in one of the most regulated and complex industries in the world.

With SAP’s mainstream maintenance for ECC scheduled to end in 2027 and regulators worldwide tightening requirements for serialization, digital batch traceability, and product integrity, modernizing your ERP landscape is no longer optional; it is mission-critical. And it begins with two things: data and AI.

Let’s explore how CIOs can modernize their SAP landscape with a data-first approach, unlocking real-world AI use cases while maintaining regulatory integrity across the supply chain.

The Current State: ECC Limitations in a Regulated, AI-Driven World

SAP ECC has been the backbone of pharma operations for over two decades. But its limitations are now showing:

- Fragmented master data across plants and systems

- Custom-coded batch traceability that’s difficult to validate

- Limited support for real-time analytics or AI applications

- Gaps in native compliance with emerging global serialization mandates

These challenges are amplified when CIOs begin implementing AI-driven process optimization or integrating with serialization solutions like SAP ATTP. ECC simply wasn’t built for today’s speed, scale, or compliance needs. The Covid-19 pandemic showed how pressing these gaps can become.

Why S/4HANA Matters — But Only With Clean Data

SAP S/4HANA promises much: real-time batch monitoring, embedded analytics, streamlined quality management, and a foundation for intelligent supply chains. However, the true value of S/4HANA only emerges when the data behind it is trusted, governed, and AI-ready.

In pharma, that means:

- GxP-aligned master data for materials, vendors, and BOMs

- Audit-ready batch records that can withstand FDA or EMA scrutiny

- Traceability of data lineage to support SAP ATTP and regulatory serialization audits

A widely cited Gartner prediction held that through 2022, 85% of AI projects would deliver erroneous outcomes because of bias in data, algorithms, or the teams managing them. In regulated pharma, that kind of failure isn’t just technical; it’s a regulatory risk.

Pharma’s Silent Risk Factor: Data Integrity

CIOs must recognize that data quality is not just a technical problem—it’s a compliance imperative.

ECC systems typically have:

- 20%+ duplicated materials or vendors

- Inconsistent inspection plans across manufacturing sites

- Obsolete or unvalidated test definitions

These issues compromise everything from SAP ATTP serialization feeds to digital twins and AI-based demand forecasting.
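To make the duplication problem concrete, here is a minimal sketch of how a duplicate rate for vendor master data could be estimated. The field names (`vendor_id`, `name`, `country`), the suffix list, and the matching rule (normalized name plus country) are illustrative assumptions, not an SAP extract format; a real assessment would likely add fuzzy matching and tax-ID checks.

```python
import re
from collections import defaultdict

# Assumed list of legal-form suffixes to ignore when comparing names.
LEGAL_SUFFIXES = {"inc", "ltd", "llc", "gmbh", "co", "corp", "ag"}

def normalize_name(name: str) -> str:
    """Lowercase, strip punctuation, and drop legal-form suffixes."""
    tokens = re.sub(r"[^a-z0-9 ]", " ", name.lower()).split()
    return " ".join(t for t in tokens if t not in LEGAL_SUFFIXES)

def find_duplicate_groups(vendors: list[dict]) -> list[list[str]]:
    """Group vendor IDs whose normalized name and country both match."""
    groups = defaultdict(list)
    for v in vendors:
        key = (normalize_name(v["name"]), v["country"].upper())
        groups[key].append(v["vendor_id"])
    return [ids for ids in groups.values() if len(ids) > 1]

def duplicate_rate(vendors: list[dict]) -> float:
    """Share of records that are redundant copies (all but one per group)."""
    if not vendors:
        return 0.0
    redundant = sum(len(g) - 1 for g in find_duplicate_groups(vendors))
    return redundant / len(vendors)

# Hypothetical sample records, for illustration only.
vendors = [
    {"vendor_id": "V001", "name": "Acme Pharma GmbH", "country": "DE"},
    {"vendor_id": "V002", "name": "ACME Pharma, GmbH.", "country": "de"},
    {"vendor_id": "V003", "name": "Borealis Chemicals Ltd", "country": "GB"},
    {"vendor_id": "V004", "name": "Acme Pharma", "country": "US"},
]

if __name__ == "__main__":
    print(find_duplicate_groups(vendors))
    print(f"Duplicate rate: {duplicate_rate(vendors):.0%}")
```

Exact matching on a normalized key is deliberately conservative: it catches formatting variants of the same vendor while leaving same-named entities in different countries untouched.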

Solution:

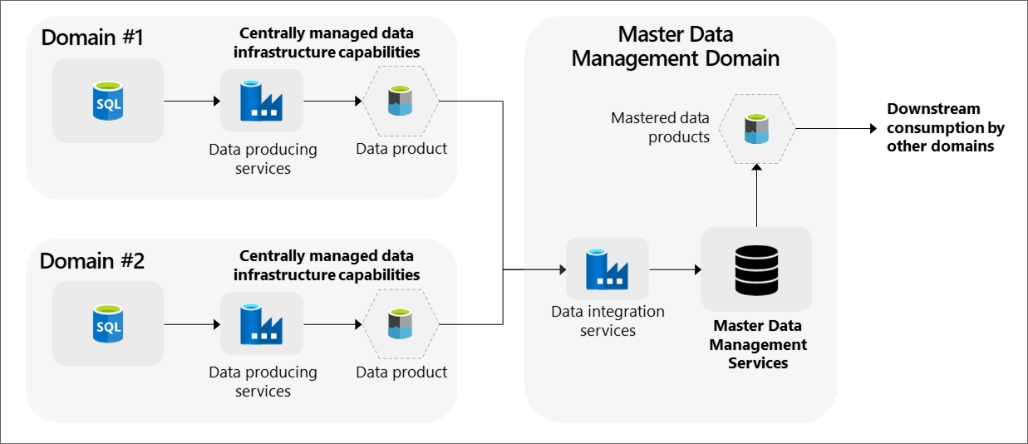

- Establish Master Data Governance (MDG) with GxP alignment

- Create a Data Integrity Index across key domains (Batch, BOM, Vendor)

- Implement audit trails for all regulated master and transactional data
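One possible shape for a Data Integrity Index is a weighted score per domain. The sketch below assumes three quality ratios (completeness, uniqueness, validity), assumed weights, and an assumed pass threshold of 90; none of these come from a standard, and the sample figures are invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class DomainMetrics:
    """Quality ratios for one data domain, each between 0.0 and 1.0."""
    completeness: float  # share of mandatory fields populated
    uniqueness: float    # share of records that are not duplicates
    validity: float      # share of records passing business-rule checks

# Assumed weights; in practice these would be agreed with QA and data owners.
WEIGHTS = {"completeness": 0.4, "uniqueness": 0.3, "validity": 0.3}

def integrity_index(m: DomainMetrics) -> float:
    """Weighted score on a 0-100 scale, rounded to one decimal place."""
    score = (WEIGHTS["completeness"] * m.completeness
             + WEIGHTS["uniqueness"] * m.uniqueness
             + WEIGHTS["validity"] * m.validity)
    return round(100 * score, 1)

def report(domains: dict[str, DomainMetrics], threshold: float = 90.0) -> dict:
    """Return {domain: (index, meets_threshold)} for each domain."""
    result = {}
    for name, metrics in domains.items():
        index = integrity_index(metrics)
        result[name] = (index, index >= threshold)
    return result

# Hypothetical measurements, for illustration only.
domains = {
    "Batch": DomainMetrics(completeness=0.98, uniqueness=1.00, validity=0.95),
    "BOM": DomainMetrics(completeness=0.90, uniqueness=0.97, validity=0.88),
    "Vendor": DomainMetrics(completeness=0.85, uniqueness=0.80, validity=0.92),
}

if __name__ == "__main__":
    for name, (index, ok) in report(domains).items():
        print(f"{name:<7} {index:5.1f}  {'OK' if ok else 'REMEDIATE'}")
```

A single number per domain is easy to track on a migration dashboard, but the underlying ratios should stay visible so remediation teams know which dimension is dragging the score down.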

AI-Driven Requirement Gathering: Accelerate Without Compromising

One of the most overlooked areas in S/4HANA modernization is blueprinting and requirement gathering. In pharma, this phase is long, compliance-heavy, and often fragmented.

Now, CIOs are leveraging Generative AI to:

- Analyze ECC transaction history to auto-generate process maps

- Draft validation-ready requirement documents based on SAP best practices

- Assist business users with smart conversational interfaces that document as-is and to-be states

This “AI-as-a-business-analyst” model is not just efficient—it helps standardize requirements and traceability, reducing the chance of non-compliant customizations.
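Before any generative model drafts a process map, the transaction history usually has to be reduced to a structure it can reason over. The sketch below uses a plain process-mining step, a directly-follows count, rather than GenAI itself. The event log layout (`case_id`, timestamp, transaction code) and the sample rows are assumptions; the transaction codes are standard SAP purchasing codes used only as labels.

```python
from collections import Counter, defaultdict

# Hypothetical event log: (case_id, ISO timestamp, transaction code).
EVENTS = [
    ("PO-1", "2024-01-02T09:00", "ME21N"),  # create purchase order
    ("PO-1", "2024-01-05T14:00", "MIGO"),   # goods receipt
    ("PO-1", "2024-01-09T10:00", "MIRO"),   # invoice entry
    ("PO-2", "2024-01-03T11:00", "ME21N"),
    ("PO-2", "2024-01-04T08:30", "ME22N"),  # change purchase order
    ("PO-2", "2024-01-08T16:00", "MIGO"),
    ("PO-2", "2024-01-12T09:15", "MIRO"),
]

def directly_follows(events):
    """Count how often step B immediately follows step A within a case."""
    by_case = defaultdict(list)
    for case_id, timestamp, step in events:
        by_case[case_id].append((timestamp, step))

    counts = Counter()
    for steps in by_case.values():
        # ISO-8601 timestamps of equal precision sort chronologically as strings.
        ordered = [step for _, step in sorted(steps)]
        counts.update(zip(ordered, ordered[1:]))
    return counts

if __name__ == "__main__":
    for (src, dst), n in directly_follows(EVENTS).most_common():
        print(f"{src} -> {dst}: {n}")
```

The resulting edge counts are the raw material for an as-is process map; rare edges often point to the site-specific workarounds that deserve scrutiny during blueprinting.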

SAP ATTP: Making Serialization a Core ERP Concern

Pharmaceutical CIOs are now expected to ensure end-to-end product traceability across the supply chain—from raw materials to patient delivery. SAP Advanced Track & Trace for Pharmaceuticals (ATTP) is purpose-built for this but depends heavily on ERP data being clean, structured, and integrated.

With the right foundation in S/4HANA and clean master data:

- SAP ATTP can serialize every batch and unit pack reliably

- AI models can predict risks in the supply chain (e.g., delayed shipments or counterfeit vulnerabilities)

- Quality teams can track deviations or holds with full digital genealogy of the product

ATTP isn’t just an add-on—it’s a compliance engine. But it only works if your ERP core is modern and your data is trusted.
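The idea of digital genealogy can be illustrated with a small graph walk. This is a sketch under stated assumptions, not ATTP’s data model: batch consumption is represented as hypothetical (input batch, output batch) pairs, and a breadth-first search answers both “which finished goods are affected by this raw material lot?” and “what went into this finished batch?”.

```python
from collections import defaultdict, deque

# Hypothetical consumption records: (input_batch, output_batch).
CONSUMPTION = [
    ("RM-API-001", "BULK-100"),
    ("RM-EXC-007", "BULK-100"),
    ("BULK-100", "FG-500"),
    ("BULK-100", "FG-501"),
    ("RM-API-002", "BULK-101"),
    ("BULK-101", "FG-502"),
]

def build_graph(records):
    """Return (upstream, downstream) adjacency maps built from the records."""
    upstream, downstream = defaultdict(set), defaultdict(set)
    for src, dst in records:
        downstream[src].add(dst)
        upstream[dst].add(src)
    return upstream, downstream

def trace(start, edges):
    """Breadth-first walk returning every batch reachable from start."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in edges.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

if __name__ == "__main__":
    upstream, downstream = build_graph(CONSUMPTION)
    # Impact analysis: everything produced from a suspect raw material lot.
    print(sorted(trace("RM-API-001", downstream)))
    # Root-cause view: everything that went into a finished batch.
    print(sorted(trace("FG-502", upstream)))
```

The same traversal only works if batch and material identifiers are consistent across plants, which is why the master data cleanup has to come before serialization.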

GenAI for Quick Wins: Where to Start

For CIOs looking to showcase quick ROI, consider deploying GenAI in areas that complement your ERP investment and are validation-friendly:

- Digital SOP Assistants: AI bots that help QA teams find and summarize policies

- Batch Record Summarization: GenAI reading batch logs to flag potential anomalies

- Procurement Bots: Drafting vendor communication or PO summaries

- Training Content Generation: Automated creation of process guides for new ERP workflows

These use cases are low-risk, business-enabling, and help build AI maturity across your teams.

The CIO Playbook: Data, Traceability, and AI Governance

As you modernize, consider this framework:

| Pillar | CIO Responsibility |
| --- | --- |
| Data Integrity | Implement MDG, create Data Quality KPIs, enforce audit logs |
| AI Governance | Define use-case ownership, ensure validation where needed |
| Compliance by Design | Embed ALCOA principles into every ERP and AI workflow |
| Serialization Readiness | Integrate S/4HANA and ATTP for end-to-end traceability |
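As one illustration of what “enforce audit logs” and ALCOA (Attributable, Legible, Contemporaneous, Original, Accurate) can mean in practice, the sketch below keeps an append-only change log in which each entry is chained to the previous one by a SHA-256 hash. Hash chaining is a technique chosen here for illustration, not something SAP or a regulator prescribes, and the class and field names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

class AuditTrail:
    """Append-only change log; each entry stores the hash of its predecessor."""

    GENESIS = "0" * 64  # placeholder hash for the first entry

    def __init__(self):
        self._entries = []

    @staticmethod
    def _digest(entry: dict) -> str:
        """SHA-256 over every field except the entry's own hash."""
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(
            json.dumps(payload, sort_keys=True).encode("utf-8")
        ).hexdigest()

    def record(self, user, object_id, field, old, new, reason):
        entry = {
            "user": user,                                         # Attributable
            "timestamp": datetime.now(timezone.utc).isoformat(),  # Contemporaneous
            "object_id": object_id,
            "field": field,
            "old": old,                                           # Original value kept
            "new": new,
            "reason": reason,
            "prev_hash": self._entries[-1]["hash"] if self._entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self._entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return False if any entry was altered or the chain was broken."""
        prev = self.GENESIS
        for entry in self._entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True

if __name__ == "__main__":
    trail = AuditTrail()
    trail.record("jdoe", "MAT-1001", "shelf_life_days", 365, 730, "Stability study update")
    trail.record("asmith", "MAT-1001", "storage_temp", "2-8C", "15-25C", "Label correction")
    print("Chain intact:", trail.verify())
```

Because each hash covers the previous entry’s hash, editing any historical record invalidates every later entry, which makes silent tampering detectable during an audit.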

Final Thoughts: From ERP Modernization to Digital Pharma Leadership

Modernizing your ERP is not just about migrating systems—it’s about transforming your enterprise into a digitally intelligent, compliance-first, AI-augmented pharma organization.

CIOs must lead this transformation from the boardroom, not the data center. With the right data governance, a smart AI adoption roadmap, and strategic alignment with platforms like SAP ATTP, your ERP modernization journey will unlock more than efficiency: it will unlock trust, agility, and innovation.

Let data be your competitive advantage, and let compliance be your credibility.

Need help assessing your ERP data health or building your AI roadmap?

Let’s connect for a Data Integrity & AI Readiness Assessment tailored to pharma manufacturing.